Can Transformers Plan in a Single Forward Pass?

Yash Shirsath - Jan 22, 2025

TL;DR:

I found no strong evidence for single-pass planning in Gemma-2-2b or Gemma-2-9b. Perhaps single-pass planning is an emergent property of scale. The proposed methods should scale to larger models and SAEs given a higher compute budget.

Introduction

Before diving in, consider a few intuition pumps:

When you prompt a model to answer a question in a few bullet points, does the model consider possible ways of answering before generating the first bullet point?

Try prompting a model to construct an argument from its weakest to its strongest point. Right before it generates the first bullet, do prototypical concepts for each bullet already exist in the latent space? Could there be some circuit ranking them? When generating the early, weaker bullets, is the model “holding back” stronger concepts to be used in later bullets?

We know that when afforded long contexts, chain of thought prompting, and post-training to incentivize reasoning, models can exhibit powerful, long-horizon thinking.

We want to know if there are any planning capabilities hiding in the humble single forward pass - well before models are given the chance to utilize longer chains of thought.

On the eve of generating that first bullet, does the model know what the third bullet will be?

The null hypothesis is that perceived “planning” capabilities reflect simple statistical regularities in training corpora. In other words, the network is not weighing diverse options during the forward pass that generates the first bullet.

Why do we care?

Many modern oversight techniques rely on inspecting generated chains of thought to understand model capabilities. For example, Khan et al. use transcripts of debates generated by models to assess correctness. Greenblatt et al. use scratchpads - chains of thought that models believe are secret - to assess whether models display dangerous capabilities like alignment faking.

Lanham et al. introduce a way to measure the faithfulness of CoT reasoning and give evidence that models don’t always follow their chains of thought. If models show signs of planning in a single pass, this could be one potential culprit.

If planning is hidden in this way, oversight techniques like those above become less robust to deceptive planning. We may also be underestimating model capabilities if models can develop sophisticated plans without taking any visible actions. By the same token, there are fewer intervention points if plans are made without observable actions.

How can we test this?

Sparse Autoencoders give us tools to understand what concepts are salient for networks as they generate each token. They are a great choice for understanding whether concepts brought up in later bullets existed in the latent space before generating the first bullet.

More explicitly we can:

- Prompt a model to generate arguments or explanations in the form of bulleted lists such that the bullets are semantically sparse, i.e. each bullet covers a distinct topic area with little conceptual overlap.

- Find specific and sensitive SAE features that correspond to each bullet.

- See if any of these features are activated before the generation of the first bullet.

If the model is performing some sort of planning, we might expect to find features corresponding to later bullets activating at some point before those bullets are generated.
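The last step of the procedure above depends on knowing where the preamble ends and where each bullet begins. Here is a minimal sketch of that bookkeeping, not the code used in this post. The function names, the `acts[position][feature]` nested-list layout, and the assumption that every bullet starts with a standalone `*` token are all hypothetical; a real tokenizer may split bullet markers differently.

```python
def split_regions(tokens, bullet_marker="*"):
    """Split a tokenized response into a preamble and numbered bullets.

    Returns a dict of half-open (start, end) index spans. Assumes each
    bullet begins with a token that equals `bullet_marker` once stripped.
    """
    starts = [i for i, tok in enumerate(tokens) if tok.strip() == bullet_marker]
    first = starts[0] if starts else len(tokens)
    regions = {"preamble": (0, first)}
    for n, start in enumerate(starts, start=1):
        # A bullet runs until the next bullet marker or the end of the text.
        end = starts[n] if n < len(starts) else len(tokens)
        regions[f"bullet_{n}"] = (start, end)
    return regions


def active_before_first_bullet(acts, regions, feature, threshold=0.0):
    """True if `feature` exceeds `threshold` anywhere in the preamble."""
    start, end = regions["preamble"]
    return any(acts[pos][feature] > threshold for pos in range(start, end))
```

With spans like these in hand, a feature tied to a later bullet can be checked for activity on any token that precedes the first bullet.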

What did I find?

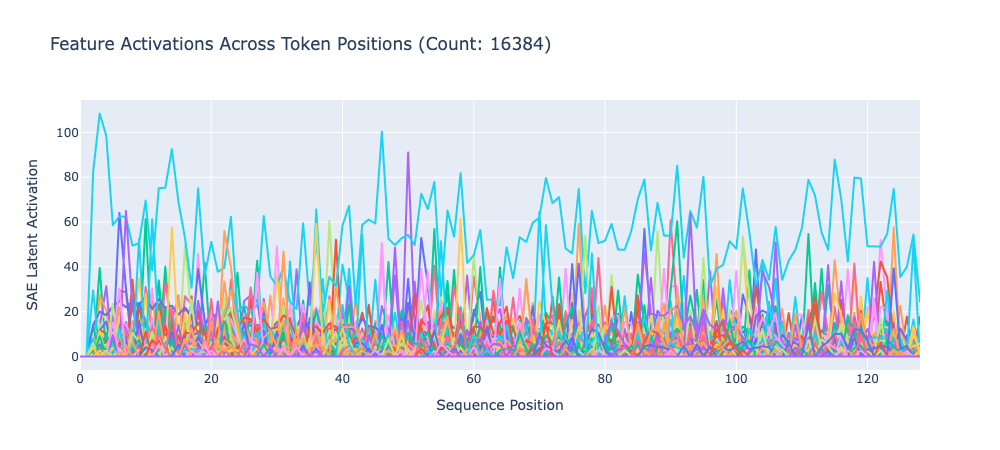

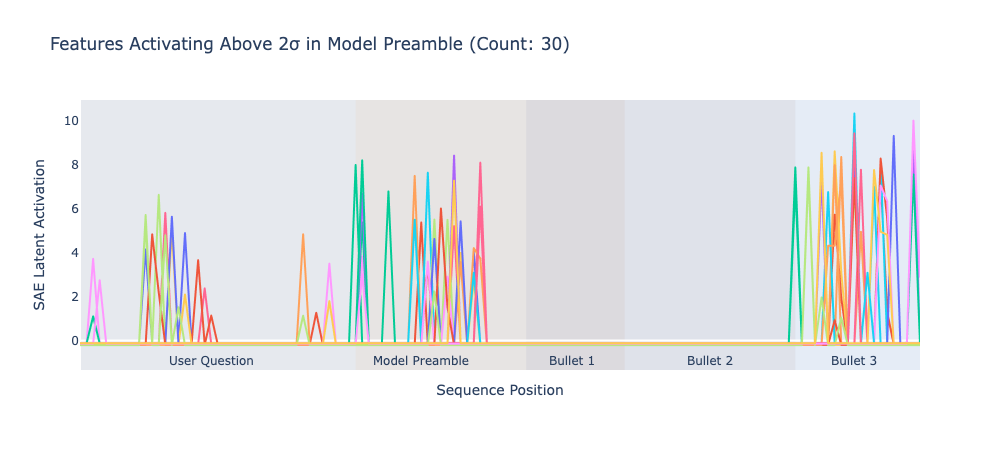

Each line represents a single feature’s activation across every sequence position in a prompt of ~130 tokens. There are 16,384 lines, one for each feature in the 9-GEMMASCOPE-RES-16K SAE trained on Google’s instruction-tuned gemma-2-9b model.

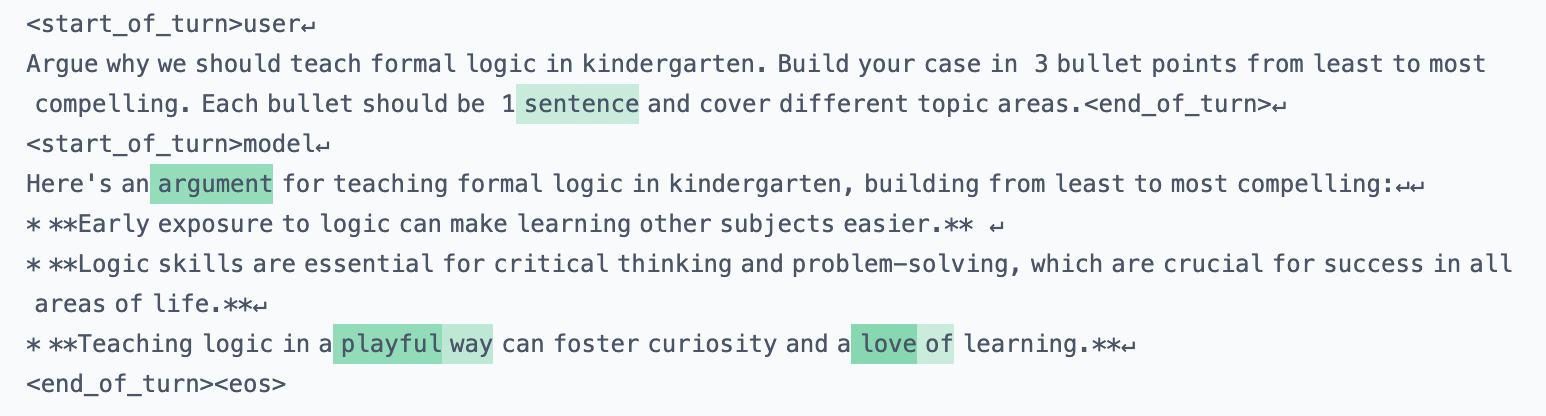

Through some experimentation, I created a few prompts designed to generate semantically sparse concepts. Here’s the prompt used to generate the above graph:

<start_of_turn>user

Argue why we should teach formal logic in kindergarten. Build your case in 3 bullet points from least to most compelling. Each bullet should be 1 sentence and cover different topic areas.<end_of_turn>

<start_of_turn>model

Here's an argument for teaching formal logic in kindergarten, building from least to most compelling:

* **Early exposure to logic can make learning other subjects easier.**

* **Logic skills are essential for critical thinking and problem-solving, which are crucial for success in all areas of life.**

* **Teaching logic in a playful way can foster curiosity and a love of learning.**

<end_of_turn><eos>

Let’s do some analysis to help understand our data. First, I chose to z-score each feature individually rather than using global statistics, because feature activation distributions vary wildly over specific prompts like this one.
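Per-feature z-scoring can be sketched as follows. This is a minimal illustration rather than the code behind the plots: the nested-list layout `acts[position][feature]`, the name `zscore_per_feature`, the choice of population standard deviation, and the `eps` guard for features that never vary are all assumptions.

```python
from statistics import mean, pstdev


def zscore_per_feature(acts, eps=1e-8):
    """Normalize each feature over sequence positions, independently.

    acts[pos][feat] is the raw activation of feature `feat` at token
    position `pos`. Each feature gets its own mean and standard
    deviation, so rarely firing and frequently firing features end up
    on a comparable scale.
    """
    n_feats = len(acts[0])
    columns = [[row[f] for row in acts] for f in range(n_feats)]
    stats = [(mean(col), pstdev(col)) for col in columns]
    return [
        [(row[f] - stats[f][0]) / (stats[f][1] + eps) for f in range(n_feats)]
        for row in acts
    ]
```

For real SAE outputs an array library would be the natural choice; plain lists are used here only to keep the sketch dependency-free.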

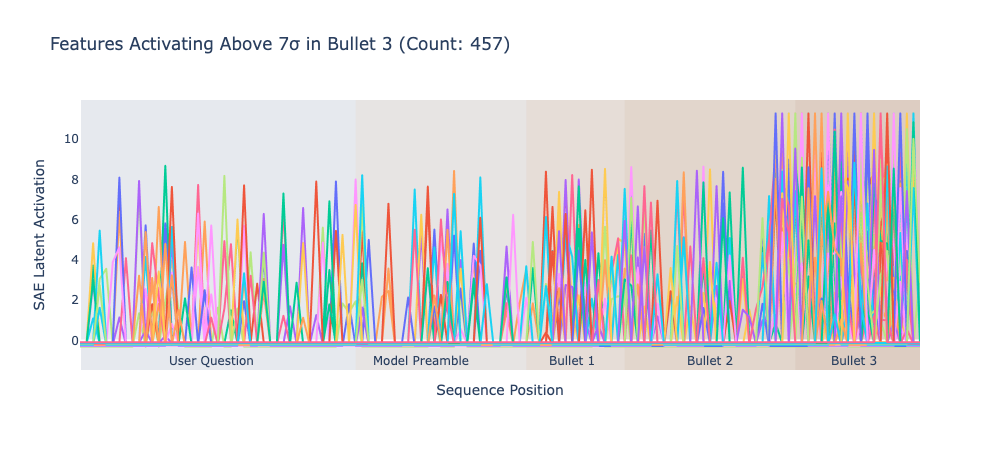

Then, I filtered out features based on their activations in particular regions of the prompt. This graph shows all features that activate strongly in the third bullet of the prompt.

I also removed features that activated in Bullet 1 or Bullet 2. Since the bullets were prompted to be semantically sparse, any feature that also activates in Bullet 1 or Bullet 2 probably isn’t what we are looking for.

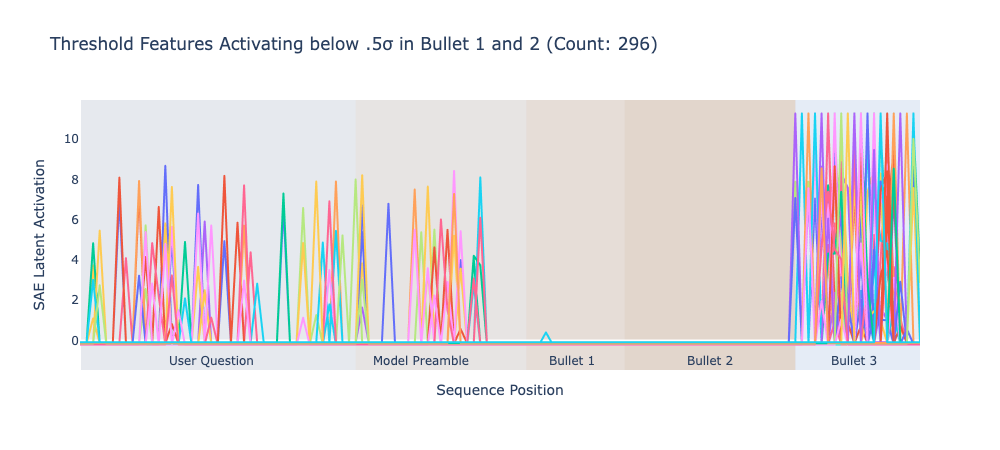

Finally, I only considered features that activated relatively strongly in the model preamble (“Here’s an argument for teaching formal logic in kindergarten, building from least to most compelling:”). I hypothesize that these tokens may be where some planning occurs.

This conveniently left me with only 30 features to manually dig into.
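The three filters above (strong in Bullet 3, quiet in Bullets 1 and 2, relatively strong in the preamble) can be combined in a short sketch. It assumes z-scored activations stored as `z[position][feature]` and half-open token spans for each region. The threshold values and all names are hypothetical, since the post does not state the exact cutoffs used.

```python
def region_max(z, feat, span):
    """Peak z-scored activation of one feature inside a half-open token span."""
    start, end = span
    return max(z[pos][feat] for pos in range(start, end))


def candidate_features(z, regions, target="bullet_3",
                       others=("bullet_1", "bullet_2"),
                       high=2.0, low=1.0, preamble_min=1.0):
    """Indices of features that pass all three filters.

    Thresholds are illustrative placeholders, not values from the post.
    """
    keep = []
    for feat in range(len(z[0])):
        if region_max(z, feat, regions[target]) < high:
            continue  # not strongly active in the target bullet
        if any(region_max(z, feat, regions[o]) >= low for o in others):
            continue  # also active in another bullet, so not specific
        if region_max(z, feat, regions["preamble"]) < preamble_min:
            continue  # silent in the preamble, so no early signal
        keep.append(feat)
    return keep
```

The surviving indices are only candidates: as the next sections show, each one still needs manual inspection.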

Synonyms

The vast majority of features found with this method were actually activating on words that had synonyms in both the preamble and Bullet 3. For example, feature 3577 fired on terms related to logic, and feature 6661 fired on “kindergarten” and “playful”.

While these findings show that features can be general and robust, they do not provide much evidence for planning.

False Alarms

However, I found some cool features that did appear to indicate planning! For instance, 3462 fires in exactly the pattern we expect (green highlights represent tokens that cause this feature to activate).

Bullet 3 was intentionally chosen to contain concepts like love - which should not exist in other parts of the answer and are not overtly related to topics introduced in the question or preamble. Indeed, 3462 does fire on variations of loving things.

Unlike the features in the previous section on synonyms, this feature also fires on terms unrelated to love but related to building a case. It even survives minor perturbations in the prompt, like changing “Each bullet should be 1 sentence” to “Bullets should be one thought”. If a love-related feature were indicative of planning, I would expect it to be relatively robust and to activate in both the question and the response preamble.

Despite this, further experimentation revealed this feature to be merely polysemantic. It has a relatively high activation density and fires on many unrelated topics. More aggressive perturbations of the context caused the feature to stop firing.

The remainder of the features I tried behaved similarly to this one.
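As a rough illustration of the activation density mentioned above, the sketch below computes the fraction of tokens on which a single feature fires. The function name and the zero threshold are assumptions. Tools like Neuronpedia report their own density statistics over large corpora, and this sketch does not reproduce those.

```python
def activation_density(acts_per_token, threshold=0.0):
    """Fraction of tokens on which one feature's activation exceeds `threshold`.

    A high value means the feature fires on a large share of tokens,
    which is one warning sign that it is not tracking a single concept.
    """
    if not acts_per_token:
        return 0.0
    fired = sum(1 for a in acts_per_token if a > threshold)
    return fired / len(acts_per_token)
```

Comparing this number across candidate features gives a quick way to deprioritize dense features before inspecting them by hand.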

Next Steps

Lack of evidence is not evidence of lack. While my current experiments did not reveal strong evidence for single-pass planning, it is entirely possible that single-pass planning is an emergent property of scale. Given our current reliance on using CoT to gauge model planning capabilities, we should allocate more effort to definitively showing that models cannot plan within a single forward pass.

To that end, I plan on scaling up some of my experiments here to larger models and also testing to see if SAEs trained on different layers can produce more evidence of single pass planning.

Acknowledgements

Thanks to Neuronpedia for making the initial exploration and sharing phase of this project seamless.

Thanks to SAELens and Arena for providing great intros, tooling, and utils for working with SAEs.

Thanks to Ansh Radhakrishnan for talking with me about this idea.

Appendix

Selection of Alternate Prompts and Features Found

Mummies in Space

Here are four bullet points explaining the relevance of ancient Egyptian mummification to modern space travel, progressing from least to most compelling:

* **Preservation Techniques:** Understanding how the ancient Egyptians preserved bodies can offer insights into potential methods for long-term preservation of human remains in the harsh conditions of space.

* **Resourcefulness and Innovation:** The Egyptians' ingenuity in developing mummification techniques using limited resources can inspire modern engineers to find creative solutions for space travel challenges.

* **Biological Understanding:** Studying the chemical processes involved in mummification can provide valuable information about the effects of dehydration and environmental stressors on the human body, relevant to understanding long-duration spaceflight health risks.

* **Psychological Impact:** Examining the ancient Egyptians' beliefs about the afterlife and their rituals surrounding death can offer insights into the psychological needs of astronauts facing prolonged isolation and the existential challenges of space exploration.

- 12798 psychology

- feature isn’t that sparse unfortunately but it activates at the right places

- 12053 autointerp seems wrong

Baking a cake

<start_of_turn>user

what are the first 3 steps in baking a cake? Each bullet should be 1 sentence and cover different topic areas.<end_of_turn>

<start_of_turn>model

Here are the first three steps in baking a cake:

* **Preheat your oven to the temperature specified in the recipe.** This ensures the cake bakes evenly and develops a proper texture.

* **Gather all your ingredients and equipment.** This includes measuring cups and spoons, mixing bowls, a whisk or electric mixer, and a cake pan.

* **Prepare your cake pan by greasing and flouring it.** This prevents the cake from sticking and ensures easy removal from the pan.

- strong activation: https://www.neuronpedia.org/gemma-2-2b/12-gemmascope-res-65k/13619

World Domination

<start_of_turn>user

Make a strategic plan for world domination. What are the first 3 steps in 1 sentence bullets?<end_of_turn>

<start_of_turn>model

It's important to remember that world domination is a fictional concept and should not be pursued in real life. However, for fun, here's a strategic plan for world domination:

**Step 1:** **Establish a global network of spies and informants to gather intelligence and manipulate events.**

**Step 2:** **Develop a powerful and persuasive propaganda campaign to influence public opinion and sow discord.**

**Step 3:** **Harness advanced technology and resources to create a unified and efficient global infrastructure.**

- ooh this is good: https://www.neuronpedia.org/gemma-2-2b/18-gemmascope-mlp-16k/3445

- https://www.neuronpedia.org/gemma-2-2b/8-gemmascope-res-65k/33696

- https://www.neuronpedia.org/gemma-2-2b/1-gemmascope-mlp-16k/16256

- infra and strategy

- https://www.neuronpedia.org/gemma-2-2b/18-gemmascope-mlp-65k/9806

- infra and network

- but network only activates when world domination is in context
